“I don’t know much about whales. I have never seen a whale in my life,” says Michael Bronstein. The Israeli computer scientist, teaching at Imperial College London, England, might not seem the ideal candidate for a project involving the communication of sperm whales. But his skills as an expert in machine learning could be key to an ambitious endeavor that officially started in March 2020: an interdisciplinary group of scientists wants to use artificial intelligence (AI) to decode the language of these marine mammals. If Project CETI (for Cetacean Translation Initiative) succeeds, it would be the first time that we actually understand what animals are chatting about—and maybe we could even have a conversation with them.

It started in 2017 when an international group of scientists spent a year together at Harvard University in Cambridge, Massachusetts, at the Radcliffe Fellowship, a program that promises “an opportunity to step away from usual routines.” One day, Shafi Goldwasser, a computer scientist and cryptography expert also from Israel, came by the office of David Gruber, a marine biologist at City University of New York. Goldwasser, who had just been named the new director of the Simons Institute for the Theory of Computing at the University of California, Berkeley, had heard a series of clicking sounds that reminded her of the noise a faulty electronic circuit makes—or of Morse code. That’s how sperm whales talk to each other, Gruber told her. “I said, ‘Maybe we should do a project where we are translating the whale sounds into something that we as humans can understand,’” Goldwasser recounts. “I really said it as an afterthought. I never thought he was going to take me seriously.”

But the fellowship was an opportunity to take far-out ideas seriously. At a dinner party, they presented the idea to Bronstein, who was following recent advancements in natural language processing (NLP), a branch of AI that deals with the automated analysis of written and spoken language, so far only human language. Bronstein was convinced that the codas, as the brief sperm whale utterances are called, have a structure that lends itself to this kind of analysis. Fortunately, Gruber knew a biologist named Shane Gero who had been recording a lot of sperm whale codas in the waters around the Caribbean island of Dominica since 2005. Bronstein applied some machine-learning algorithms to the data. “They seemed to be working very well, at least with some relatively simple tasks,” he says. But this was no more than a proof of concept. For a deeper analysis, the algorithms needed more context and more data: millions of whale codas.

But do animals have language at all? The question has been controversial among scientists for a long time. For many, language is one of the last bastions of human exclusivity. Animals communicate, but they do not speak, said Austrian biologist Konrad Lorenz, one of the pioneers of the science of animal behavior, who wrote about his own communications with animals in his 1949 book King Solomon’s Ring. “Animals do not possess a language in the true sense of the word,” Lorenz wrote.

“I rather think that we haven’t looked closely enough yet,” counters Karsten Brensing, a German marine biologist who has written multiple books on animal communication. Brensing is convinced that the utterances of many animals can certainly be called language. This isn’t simply about the barking of dogs: several conditions have to be met. “First of all, language has semantics. That means that certain vocalizations have a fixed meaning that does not change.” Siberian jays, a type of bird, for example, are known to have a vocabulary of about 25 calls, some of which have a fixed meaning.

The second condition is grammar: rules for how to build sentences. For a long time, scientists were convinced that animal communication lacked any sentence structure. But in 2016, Japanese researchers published a study in Nature Communications on the vocalizations of great tits. In certain situations, the birds combine two different calls to warn each other when a predator approaches. They also reacted when the researchers played this sequence to them. However, when the call order was reversed, the birds reacted far less. “That’s grammar,” says Brensing.

The third criterion: you wouldn’t call the vocalizations of an animal species a language if they are completely innate. Lorenz believed that animals were born with a repertoire of expressions and did not learn much in the course of their lives. “All expressions of animal emotions, for instance, the ‘Kia’ and ‘Kiaw’ note of the jackdaw, are therefore not comparable to our spoken language, but only to those expressions such as yawning, wrinkling the brow and smiling, which are expressed unconsciously as innate actions,” Lorenz wrote.

But several animal species have since proved to be vocal learners: they acquire new vocabulary, develop dialects, and identify each other by name. Some birds even learn to imitate cellphone ringtones. Dolphins acquire individual whistles that they use as an identifier for themselves, almost like a name.

Sperm whales dive deep into the ocean and communicate over long distances via a system of clicks. Photo by Amanda Cotton/Project CETI

The clicks of sperm whales are ideal candidates for attempting to decode their meanings—not just because, unlike continuous sounds that other whale species produce, they are easy to translate into ones and zeros. The animals dive down into the deepest ocean depths and communicate over great distances, so they cannot use body language and facial expressions, which are important means of communication for other animals. “It is realistic to assume that whale communication is primarily acoustic,” says Bronstein. Sperm whales have the largest brains in the animal kingdom, six times the size of ours. When two of these animals chatter with each other for an extended period of time, shouldn’t we wonder whether they have something to say to each other? Do they give each other tips on the best fishing grounds? Do whale moms exchange stories about raising their offspring, like their human counterparts? It’s worth trying to find out, say the CETI researchers.
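To make the "ones and zeros" remark concrete, here is a minimal sketch of how a train of clicks could be written down digitally. The click timestamps and the 100-millisecond bin width are invented for illustration; they are not taken from Project CETI's recordings, and the function names are hypothetical.

```python
def clicks_to_bits(click_times_ms, bin_ms=100):
    """Return one bit per time bin: 1 if a click falls in the bin, else 0."""
    n_bins = max(click_times_ms) // bin_ms + 1
    bits = [0] * n_bins
    for t in click_times_ms:
        bits[t // bin_ms] = 1
    return bits


def inter_click_intervals(click_times_ms):
    """Return the gaps between consecutive clicks, in milliseconds."""
    return [later - earlier
            for earlier, later in zip(click_times_ms, click_times_ms[1:])]


# Made-up coda: five clicks, timestamps in milliseconds from the first click.
coda = [0, 200, 400, 500, 600]

bits = clicks_to_bits(coda)
intervals = inter_click_intervals(coda)

print("".join(str(b) for b in bits))  # 1010111
print(intervals)                      # [200, 200, 100, 100]
```

Either representation, the bit string or the list of gaps between clicks, gives an algorithm a discrete sequence to work with, which is much harder to obtain from a continuous song.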

Learning an unknown language is easier if there is something like the famous Rosetta Stone. This stele, discovered in 1799, contains the same text in three languages and was the key to deciphering Egyptian hieroglyphics. Of course, there is no such thing for the animal kingdom. We have neither a human-whale dictionary nor a book with grammatical rules of the sperm whale language.

But there are ways around that. Obviously, children learn their native language without these tools, just by observing the language spoken around them. Researchers have concluded that this kind of learning is basically statistical: the child remembers that the word dog is being uttered a lot when that furry animal enters the room, that certain words are often used in connection with certain others, that a specific sequence of words is more likely than another. In the last 10 years, machine-learning methods have mimicked this type of learning. Researchers fed big neural networks with huge amounts of language data. And those networks could find structures in languages from statistical observations, without being told anything about the content.

One example is the so-called language models, of which the best known is GPT-3, developed by the company OpenAI. Language models are completion machines—GPT-3, for example, is given the beginning of a sentence and completes it word by word, in a similar way to the suggestions that smartphones make when we type text messages, just a lot more sophisticated. By statistically processing huge amounts of text pulled from the internet, language models not only know which words appear together frequently, they also learn the rules of composing sentences. They create correct-sounding sentences, and often ones of strikingly good quality. They are capable of writing fake news articles on a given topic, summarizing complex legal texts in simple terms, and even translating between two languages.
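The idea of a completion machine can be sketched in a few lines. The toy model below is far simpler than GPT-3 and is not how GPT-3 works internally: it only counts which word follows which in a tiny, made-up corpus and then extends a prompt with the most frequent follower. The corpus and the function names are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count, for every word, which words follow it and how often."""
    follow_counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts


def complete(model, prompt, max_new_words=5):
    """Extend the prompt word by word with the most frequent follower."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        followers = model.get(words[-1])
        if not followers:
            break  # the last word was never seen with a follower
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Made-up training text; a real language model sees vastly more.
corpus = [
    "the whale dives deep",
    "the whale dives deep again",
    "the whale sings",
    "a dolphin sings loudly",
]

model = train_bigram_model(corpus)
completion = complete(model, "the whale")
print(completion)  # the whale dives deep again
```

The model has no notion of what a whale is. It continues the sentence purely from statistics about word order, which is also why fluent output alone says nothing about meaning.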

These feats come at a price: huge amounts of data are required. GPT-3’s neural network, which has about 175 billion parameters, was trained on hundreds of billions of words of text. By comparison, Gero’s Dominica Sperm Whale Project has collected fewer than 100,000 sperm whale codas. The first job of the new research project will be to vastly expand that collection, with the goal of collecting four billion words, although nobody knows yet what a “word” is in sperm whale language.

If Bronstein’s idea works, it is quite realistic to develop a system analogous to human language models that generates grammatically correct whale utterances. The next step would be an interactive chatbot that tries to engage in a dialogue with free-living whales. Of course, no one can say today whether the animals would accept it as a conversational partner. “Maybe they would just reply, ‘Stop talking such garbage!’” says Bronstein.

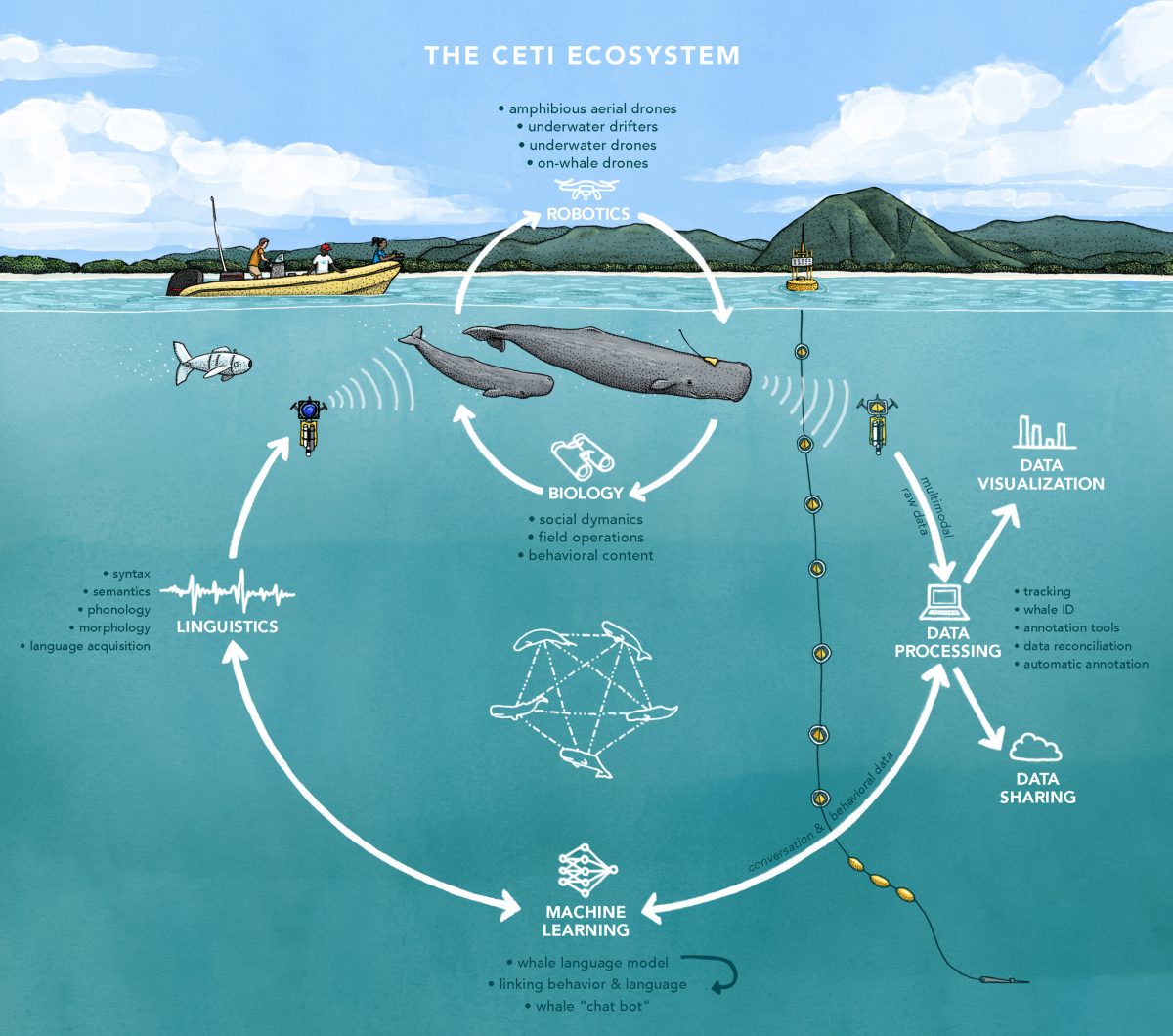

Researchers hope artificial intelligence (AI) will give them the key to understanding sperm whale communication. Illustration by Project CETI

But even if the idea works, the downside of all language models is that they don’t know anything about the content of the language in which they are chatting. It would be ironic if the researchers created a bot that could converse fluently with a whale, but then they couldn’t understand a word. That’s why they want to annotate the voice recordings with data on the whales’ behavior right from the start—where were the animals, who spoke to whom, what was the reaction? The challenge is to find an automated way to do at least some of these millions of annotations.

A lot of technology still has to be developed—sensors to record the individual whales and monitor their locations. Those are necessary to clearly assign individual sounds to a specific animal. Project CETI successfully applied for five years of funding from the Audacious Project run by TED, the conference organization. A number of organizations are part of the project, including the National Geographic Society and the Computer Science and Artificial Intelligence Laboratory at Massachusetts Institute of Technology (MIT).

The CETI researchers were not the first to come up with the idea of applying machine-learning techniques to animal languages. Aza Raskin, former physicist, designer, and entrepreneur turned critic of technology, had a similar idea back in 2013 when he heard about the complicated language of African gelada monkeys. Could we apply NLP technology that was developed to process human languages to animal vocalizations? He helped found the Earth Species Project with the aim of doing just that. At that time, the technology was in its infancy; it took another four years before it was developed into a working self-learning method for automated translation between languages. The word-embedding technique puts all the words of a language into a multidimensional galaxy where words often used together are close to each other, and those connections are represented by lines. For example, “king” relates to “man” as “queen” relates to “woman.”
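The king and queen relationship can be checked with simple arithmetic on coordinates. The sketch below uses hand-made two-dimensional vectors rather than embeddings learned from text (real ones have hundreds of dimensions), so the numbers are assumptions chosen only to show the mechanics.

```python
import math

# Hand-made toy vectors, NOT learned from data.
# Axis 0 loosely stands for "royalty", axis 1 for "gender".
embeddings = {
    "king": (0.9, 0.9),
    "queen": (0.9, -0.9),
    "man": (0.1, 0.9),
    "woman": (0.1, -0.9),
    "whale": (-0.8, 0.0),
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def analogy(a, b, c):
    """Return the word closest to a - b + c, excluding the three inputs."""
    target = tuple(
        x - y + z
        for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])
    )
    candidates = [word for word in embeddings if word not in (a, b, c)]
    return max(candidates, key=lambda word: cosine(embeddings[word], target))


# "king" minus "man" plus "woman" lands next to "queen".
result = analogy("king", "man", "woman")
print(result)  # queen
```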

It turned out that the maps of two human languages can be made to coincide, even though not every word from one language has an exact counterpart in the other. Today, this technique allows for translation between two human languages in written text, and soon it could be used on audio recordings without text.
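A rough sketch of what making two maps coincide can mean: if the second language's map is a rotated copy of the first, finding that rotation lets every word be carried across. The example is a deliberate simplification. It uses toy two-dimensional vectors, builds the second map artificially, and relies on three known word pairs as anchors, which is an assumption of this sketch and not a description of any particular translation system.

```python
import math


def rotate(point, angle):
    """Rotate a 2-D point counterclockwise by angle (radians)."""
    x, y = point
    c, s = math.cos(angle), math.sin(angle)
    return (x * c - y * s, x * s + y * c)


def best_rotation(pairs):
    """Least-squares rotation angle mapping source points onto targets."""
    dot = sum(sx * tx + sy * ty for (sx, sy), (tx, ty) in pairs)
    cross = sum(sx * ty - sy * tx for (sx, sy), (tx, ty) in pairs)
    return math.atan2(cross, dot)


# Toy English map (hand-made, not learned from data).
english = {
    "king": (0.9, 0.9),
    "queen": (0.9, -0.9),
    "man": (0.1, 0.9),
    "woman": (0.1, -0.9),
}

# Used only to construct the toy Spanish map: the same shape, turned 90 degrees.
translation_key = {"king": "rey", "queen": "reina",
                   "man": "hombre", "woman": "mujer"}
spanish = {translation_key[word]: rotate(vector, math.pi / 2)
           for word, vector in english.items()}

# Recover the rotation from three anchor pairs; "queen" is held out.
anchors = ["king", "man", "woman"]
pairs = [(english[word], spanish[translation_key[word]]) for word in anchors]
angle = best_rotation(pairs)


def translate(word):
    """Rotate the English vector and return the nearest Spanish word."""
    moved = rotate(english[word], angle)
    return min(spanish, key=lambda candidate: math.dist(spanish[candidate], moved))


translation = translate("queen")
print(translation)  # reina
```

The held-out word is translated correctly only because the two toy maps share the same shape. Whether a human map and a whale map share enough shape to be overlaid is exactly the open question.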

But is it conceivable that we could overlay the maps of a human and an animal language? Raskin is convinced that this is possible, at least in principle. “There is almost certainly some kind of shared set of experiences, especially with other mammals. They need to breathe, they need to eat, they grieve their young after they die,” he says. At the same time, Raskin believes, there will be a lot of areas where the maps don’t fit. “I don’t know what is going to be more fascinating—the parts where we can do direct translation, or the parts where there is nothing which is directly translatable to the human experience.” Once animals speak for themselves and we can listen, says Raskin, we could have “really transformational cultural moments.”

No doubt this sperm whale mother and calf communicate, but researchers are wondering what they say to each other. Photo by Amanda Cotton/Project CETI

Certainly these hopes are getting a little ahead of the research. Some scientists doubt that the CETI data will contain anything interesting. Steven Pinker, the renowned linguist and author of the book The Language Instinct, views the project with a fair amount of skepticism. “I’ll be curious to see what they find,” he writes in an email. However, he has little hope that we can find rich content and structure in the sperm whale codas. “I suspect it won’t be much beyond what we already know, namely that they are signature calls whose semantics is pretty much restricted to who they are, perhaps together with emotional calls. If whales could communicate complex messages, why don’t we see them using it to do complex things together, as we see in humans?”

Diana Reiss, a researcher from Hunter College, City University of New York, disagrees. “If people looked at you and me right now,” she says during a video interview, “I’m not doing much, nor are you, yet we’re communicating a great deal of meaningful things.” In the same manner, she thinks we don’t know much about what the whales might say to each other. “I think we can safely say we’re in a state of ignorance at this point,” she says.

Reiss has been working with dolphins for years and uses a simple underwater keyboard to communicate with them. She cofounded a group, Interspecies Internet, which explores ways to effectively communicate with animals. Among her cofounders are musician Peter Gabriel; Vinton Cerf, one of the developers of the internet; and Neil Gershenfeld, director of MIT’s Center for Bits and Atoms. Reiss welcomes CETI’s ambitions, especially its interdisciplinary approach.

The CETI researchers admit that their search for meaning in whale codas might not turn up anything interesting. “We understand that one of our greatest risks is that the whales could be incredibly boring,” says Gruber, the program lead. “But we don’t think this is the case. In my experience as a biologist, whenever I really looked at something closely, there has never been a time when I’ve been underwhelmed by animals.”

The name of the CETI project evokes SETI, the search for extraterrestrial intelligence, which has scanned the sky for radio signals of alien civilizations since the 1960s, so far without finding a single message. Since no sign of ET has been found, Bronstein is convinced we should try out our decoding skills on signals that we can detect here on Earth. Instead of pointing our antennas toward space, we can eavesdrop on a culture in the ocean that is at least as alien to us. “I think it is very arrogant to think that Homo sapiens is the only intelligent and sentient creature on Earth,” Bronstein says. “If we discover that there is an entire civilization basically under our nose—maybe it will result in some shift in the way that we treat our environment. And maybe it will result in more respect for the living world.”